AI Will Take (Half) Your Job

The real AI revolution won't be replacing humans; it will be creating superhumans.

By now, even my grandmother has heard of ChatGPT. It's become more than just a gimmicky chatbot – people have made it a permanent fixture of their workflows. I use it in my own day-to-day work to help write this blog post, craft SQL statements, generate Python scripts, write user stories, get a jumpstart on research, and countless other things.

Of course, ChatGPT is just the tip of the iceberg. The GPT API allows external platforms to prompt the model for more specific use cases. I've seen it used in an ETL platform, where you describe a transformation in natural language and watch it get translated into a programmatic set of instructions for the tool. All of these tools, especially in the hands of skilled users, make our work faster and more efficient.

This has led a lot of people, tech workers especially, to panic that their jobs are basically dead. It's only a matter of time, they think, before AI replaces them and leaves them jobless. I'll be honest, if your contribution to the team is setting the corner-radius or changing font sizes, you're probably right (but you were probably at risk anyway). But there's a host of reasons NOT to panic, and a lot of barriers that I don't think AI will ever be able to knock down.

Just like the tractor gave us superhuman abilities to grow food, and power tools gave us superhuman abilities to build, so will AI give us superhuman abilities to create.

The real AI revolution won't be replacing humans; it will be creating superhumans.

Practical Factors

If you work for any sort of tech company, you know that the single most fought-over thing in the company is the roadmap. For anyone not in tech, the roadmap is just a document that explains what features are going to be built, and in what order.

Having done this for about 5 years now, and having met dozens of people who have done this waaaay longer than me, I can promise you that there's not a single company that is struggling to find work to put on the roadmap. Every single department of the org wants something built and they absolutely must have it now.

Acceleration, not layoffs

Because of the roadmap issue above, software companies have to fight a budget/capacity battle constantly. Engineers can only push out so much good code in a fixed time frame, and it's never enough to satisfy the demand for it. Any organization that figures out how to use AI effectively to write good code is going to use that to increase its development capacity, not to reduce its engineering spend.

Some corporations can be ruthless in managing costs, but if you know your competitor has just used AI to double their engineering capacity, are you really going to use it to reduce headcount to maintain the same capacity?

Distribution Factors

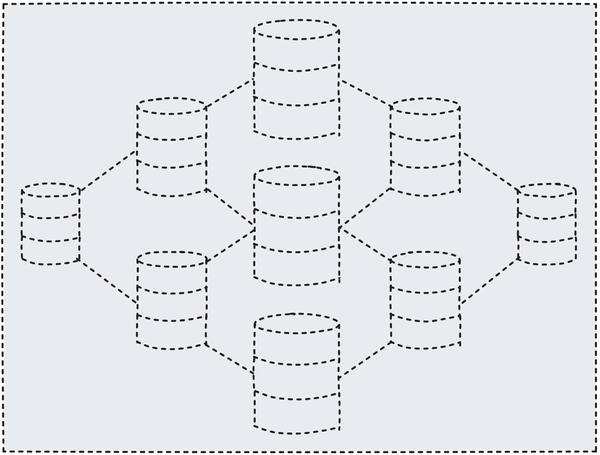

All new technology needs a distribution mechanism in order to compete effectively. It's easy for us to make sense of this for physical goods – if you make a new widget, you need some sort of supply chain infrastructure to make and sell the goods. A network of suppliers, maybe a few warehouse locations for efficient last-mile distribution, and some actual shipping contractors. How else are you going to get your product to market?

It's harder to think about the sort of distribution hurdles AI has to overcome – it's all on the internet, right? Just plug in and go!

Not so fast. There's a lot of ground between AI tools existing and being able to use them effectively. Are your systems capable of interacting with AI? Do you have the expertise on hand to create the handshake? Are the principals at your firm willing to take the plunge and take a risk on it?

This distribution can be represented in one of my favorite charts ever, the Product Adoption Curve.

It's not my invention; you can Google it for more information. It's a tool used by product managers to help understand the types of people that will be using their product. As of this writing, we're still in the "Innovators" stage of adoption, which is made up of people like me who like to use new technology just because it's new and shiny. However, what I really want to point to are the "late majority" and "laggards" groups. These two groups represent about half of all people that will ever use a product or technology and are characterized by their resistance to change. The people in those groups are also decision makers at companies that will have the opportunity to use new technologies, but won't use them to their fullest. They'll always be skeptical of change.
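To put rough numbers on "about half": the shares usually quoted for this curve come from Everett Rogers' diffusion of innovations model. Here's a quick sketch, assuming those textbook percentages (the names `ADOPTER_SHARES` and `combined_share` are just mine for illustration):

```python
# Textbook adopter-category shares from Rogers' diffusion of innovations
# model, as a percent of everyone who eventually adopts. These are the
# commonly quoted figures, used here as an assumption.
ADOPTER_SHARES = {
    "innovators": 2.5,
    "early adopters": 13.5,
    "early majority": 34.0,
    "late majority": 34.0,
    "laggards": 16.0,
}


def combined_share(*groups):
    """Add up the percentage share of the named adopter groups."""
    return sum(ADOPTER_SHARES[group] for group in groups)


# The two change-resistant groups together
print(combined_share("late majority", "laggards"))
```

Under those assumed shares, the late majority and laggards add up to half of all eventual adopters, which is where the "about half" figure comes from.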

No matter how useful a new technology is, you can't ignore the human factors in its distribution, or adoption. Just like how my grandparents didn't own a computer until the late 2010s, it will take a while for this tech to work its way through our lives.

Human Factors

Speaking of human factors, we're probably the biggest obstacle to widespread use of these technologies. Humans and computers speak and think differently. LLMs are meant to be a translation layer that helps us interact with a computer and get it to do what we want it to do. But there's still a pretty big gap.

We're not very descriptive

LLMs require the person making the request to be verbose, which means we need to fight every instinct we've ever learned about communicating and overcommunicate what we want from the AI. We're not good at that. We tend to communicate with other people with an assumption of a shared experience.

I'm sure you've had that experience before, "Have you ever seen XYZ movie?" as you prepare to tell a hilarious joke, only to have the joke killed because the other person hasn't seen the movie. Because of that lack of shared experience, the other person won't get the joke.

Close your eyes for a minute. I want you to create an image in your mind of "a train moving down a track". What did you see? Was it a cartoon or a realistic image? Was it from inside or outside the train? Was it day or night? What was the weather? What geographical region? What color was the train? Was it steam, diesel, or electric? Freight, passenger, or subway? Here are a couple of random examples from Google when searching for trains moving down a track.

Your personal experiences shape that expectation, and unfortunately, for anyone seeking a specific output, the level of effort that goes into crafting the appropriate prompt is going to be frustrating. We're not very good at being verbose. While this can be learned, the average person isn't going to take the time to do it.

Value Creation

AI can be pretty good at generating things, but where it falls short is being able to decide WHAT to put out. That still requires a human. It's the discernment and decision making capabilities of a human that create the real value. So while AI might remove some of the mundane, well defined, repetitive tasks of making a vision a reality, it can't create the vision.

It still needs YOU.

Human Distrust

Most of us are a little skeptical of anything that feels "unnatural" to us. No matter how cool it is to see a self-driving car, most of us are nervous to get in one. Something feels just a little off about it, a driver we can't see or have a real conversation with. By some reported figures, self-driving systems surpassed the human safety record for accident rate per million miles driven in 2022, which would mean it's now safer to let your Model 3 drive you to work instead of doing it yourself. However, the vast majority of Americans still wouldn't ride in a self-driving car.

Do you really think AI is going to be any different? Are you going to trust AI to just do your work for you, or are you going to check behind it constantly? Will you allow employees to delegate critical tasks to AI anytime soon? Doubtful.

Human Resilience

We're a hardy bunch. We don't let things keep us down. When the tractor made agrarian societies obsolete, we moved into cities and started manufacturing things. When the industrial revolution made it cheaper and easier to make more stuff with fewer people, we moved on to services, and then to the information age.

Do we really think that "this time it's different"? Or will we find new ways to make a living that we can't even conceive of today?

Technological Factors

Just like the distribution and human factors that are going to keep AI from making us all jobless, there are plenty of limitations of the technology itself that are going to keep its capabilities finite. I don't even mean today's versions of AI, I mean AI in general. Here's hoping I'm not being too contrarian...

Innovation S-Curves

New innovations follow an S-Curve. There's a slow initial adoption while people figure out how to use it. Then the space explodes and everyone starts to panic about how fast things are changing. Finally, the new innovation reaches its performance ceiling and progress levels off.

AI is going to be no different. While there's no way to know where that ceiling is, there is one and we're going to hit it at some point. There's only so much time and hardware to train models, and only so much publicly available data to train the models on.
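If you want to see that shape in numbers, a logistic function is one common way to draw an S-Curve. This is only an illustration, not a forecast: the function name `s_curve` and its `ceiling`, `midpoint`, and `steepness` parameters are made up for the example, and nobody knows the real values for AI.

```python
import math


def s_curve(t, ceiling=1.0, midpoint=0.0, steepness=1.0):
    """Logistic curve: slow start, fast middle, flat near the ceiling."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))


# Print performance at evenly spaced points in time. The jumps between
# rows are small at first, largest around the midpoint, then small again.
for t in range(-6, 7, 2):
    print(t, round(s_curve(t), 3))
```

The useful takeaway is the last part of the curve: the same amount of extra time buys less and less improvement as you approach the ceiling.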

AI Is Derivative

One of the key limiting factors of AI models is that they're not really creative. They can look absolutely creative relative to an individual contributor, since the scope of what they can reference is much broader than what the human mind can retrieve at once.

However, they can only work within the confines of their training data. It's integral to how an AI is made: give a model some training data, let it make predictions against that data, and see how close it got. Once you leave that bubble, it's taking wild guesses with no way to validate whether its guess is correct.
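Here's a toy version of that predict-and-check loop. It's a deliberately tiny sketch, nothing like a real LLM: the `train` function, the one-parameter model, and the made-up data (the squares of 0 through 3) are all assumptions for illustration.

```python
def train(xs, ys, steps=2000, lr=0.01):
    """Fit y ~ w * x by predicting, measuring the error, and nudging w."""
    w = 0.0
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


# Training "bubble": the model only ever sees x from 0 to 3, where y = x * x
xs = [0.0, 1.0, 2.0, 3.0]
ys = [x * x for x in xs]
w = train(xs, ys)

# Compare a guess inside the bubble with one far outside it
for x in (2.0, 10.0):
    print(f"x={x}: predicted {w * x:.1f}, actual {x * x:.1f}")
```

Inside the range it trained on, the model's guesses land in the right neighborhood. At x = 10 it misses badly, and nothing in the training loop ever told it so.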

This means that while it can fill in gaps in human creativity (what would Old Town Road sound like if it were performed by Johnny Cash?), it can't really escape the bounds of its training data – it's always a derivative of something else.

This means that in order to expand the bubble of new, novel ideas, you need humans!

A Map Is Not The Territory

As humans, we often confuse the representation of something for the real thing. A map of NYC is not NYC. It's a shadow of the thing it's trying to represent, and requires interpretation in order to understand it. AI will always struggle with this problem. AI cannot experience reality the way you and I do; it only has the representation to go off of. This is one of the reasons why image generators are so bad at drawing hands: they don't actually know what a hand is, just what a picture of a hand looks like.

At its core, AI is just a probability model (if you listen closely, you can hear a herd of angry AI engineers, "it's more than that!!!!"). So when you ask it to generate a picture of shaking hands, the above does fit the bill. It's statistically close to a picture of two shaking hands, but it's oddly off-putting to us because it's not close at all to reality. Because we've seen hands and held or shaken one before, we know what that interaction should look and feel like – it's trivial for us humans to see that something is off about that. But AI will never quite be able to solve it.

Of course, we can attempt to make the problem better. Just give the model a 3D model of a human and let it compare a 3D rendering of the action to the output image and airbrush the image a little bit. That's a valid approach to solving that problem, but even that 3D model is just a map of the territory. Even if we got it good enough for what we need, it's still just one problem solved with a lot of human effort. Hardly the massive human displacement we're so concerned about.

Think about every nuanced problem millions of people are employed to solve every day, and you start to see why I'm skeptical that people will be replaced in their jobs.

Superhuman

Before I finish, I want to stress that I don't think AI is useless, or that it's low quality garbage. It's not. This is one of the most exciting times to be in technology in the last 30 years or so.

If history teaches us anything, it's that technological innovation tends to just make us more productive as a species. Knowledge workers are long overdue for a productivity boost. For readers who have been in their industry for a long time, how has your productivity changed in the last 20 or 30 years? If you're in CRE, how many more deals are you able to execute now than a couple of decades ago? If you're in tech, are you able to ship features any faster? I have no doubt marginal gains have been made, but beyond improvements in your own skills and experience, has your ability to get knowledge work done improved exponentially? Probably not.

That's the real AI revolution. It's not replacing humans; it's making superhumans.

Will some people lose their jobs? I'm sure they will; that tends to be the way of disruptive innovation. But the people who learn to embrace the revolution instead of fighting it will succeed. They will be the people to reap the benefits this new superpower is going to give them.

We're going to be okay. Humans always find ways to adapt. This time will be no different.
